Background

An autonomous ferry can use a variety of sensors to keep track of its surroundings. These can be categorized as active sensors (radar, lidar) and passive sensors (optical and infrared cameras). Active sensors emit radiation and can therefore measure distance, which is proportional to the two-way travel time of the signal. Passive sensors cannot do this, and a single passive sensor can therefore only provide angle measurements. Nevertheless, humans are able to navigate the world with only passive sensors, namely our eyes. One reason why this works well is that eyes come in pairs, that is, in a stereo configuration.
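As a brief illustration of the active-sensor principle, the range follows from the two-way travel time as sketched below. The symbols are introduced here for illustration only: $r$ is the range, $c$ the propagation speed of the emitted signal, and $\Delta t$ the measured two-way travel time.

$$
r = \frac{c \, \Delta t}{2}
$$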

In contrast to the short baseline between two human eyes, cameras can be mounted on an autonomous ferry with a baseline of several meters, enabling depth vision at distances larger than 100 meters. The full-scale autonomous ferry milliAmpere2, currently in planning, is therefore expected to use optical stereo vision to a large extent as part of its sensor fusion system. Research papers from the autonomous driving community have argued that stereo vision can reach sufficient accuracy to replace lidars.
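The effect of the baseline can be illustrated with the standard rectified pinhole stereo model, in which depth is Z = f·B/d and a disparity error of δd pixels gives a depth error of roughly Z²/(f·B)·δd. The sketch below is only a back-of-the-envelope illustration: the focal length of 1200 pixels, the 1-pixel disparity error, the eye-like baseline of 0.065 m and the 2 m baseline are all assumed values, not parameters of the actual camera setup.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth Z = f * B / d for a rectified pinhole stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=1.0):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    focal_px = 1200.0  # assumed focal length in pixels (hypothetical)
    # Assumed baselines: roughly human-eye spacing versus a wide ferry mount.
    for baseline_m in (0.065, 2.0):
        err = depth_error(focal_px, baseline_m, depth_m=100.0)
        print(f"baseline {baseline_m} m: ~{err:.1f} m depth error at 100 m")
```

With these assumed numbers, the predicted uncertainty at 100 m is on the order of 128 m for the eye-like baseline and about 4 m for the 2 m baseline, which is why a wide baseline matters at these ranges.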

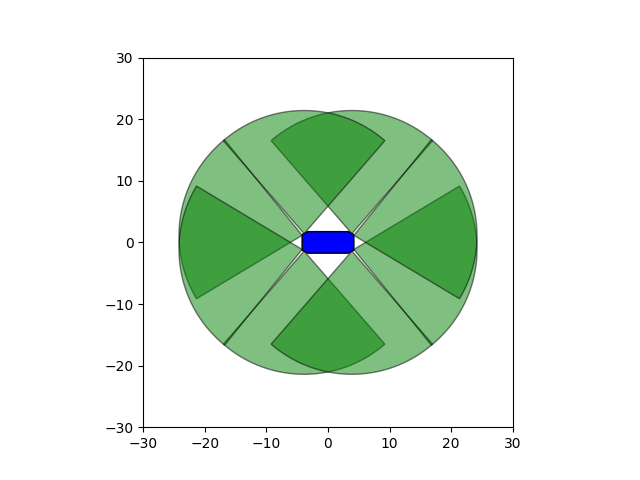

Suggested camera placements on milliAmpere2.

Scope

Over the past year, two MSc students affiliated with the Autoferry project have experimented with stereo vision algorithms for the autonomous ferry application. The goal of the present project is to continue this work and to demonstrate stereo vision in the operational environment of the autonomous ferry. This can be a single-student project or a collaboration between two or more students. The project will, at least in the early phases, use the same equipment that has been used in the ongoing MSc project on stereo vision.

Proposed Tasks for the 5th year project

- Mounting of stereo equipment on milliAmpere, and integration with navigation system.

- Verification of intrinsic calibration. Extrinsic calibration against navigation system.

- Experiment: Record video of other boats with ground truth through GPS and/or lidar.

- Investigate the performance of different stereo vision algorithms with ground truth.

- Write report.
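The comparison against ground truth in the tasks above could, for example, be summarized with a few simple range-error statistics. The following is a minimal sketch under the assumption that each stereo range estimate has already been paired with a ground-truth range from GPS or lidar. The function name and the choice of metrics (bias, RMSE, mean relative error) are illustrative and not prescribed by the project.

```python
import math


def range_errors(estimated_m, ground_truth_m):
    """Return (bias, rmse, mean_relative_error) for paired range values in meters."""
    if len(estimated_m) != len(ground_truth_m) or not estimated_m:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [e - g for e, g in zip(estimated_m, ground_truth_m)]
    n = len(diffs)
    bias = sum(diffs) / n                            # systematic over/underestimation
    rmse = math.sqrt(sum(d * d for d in diffs) / n)  # overall error magnitude
    rel = sum(abs(d) / g for d, g in zip(diffs, ground_truth_m)) / n
    return bias, rmse, rel


if __name__ == "__main__":
    # Hypothetical values for illustration only, not measured data.
    stereo = [11.0, 19.0]
    truth = [10.0, 20.0]
    print(range_errors(stereo, truth))
```

Reporting the relative error alongside the RMSE is useful here because stereo depth uncertainty grows with range, so absolute errors alone can hide how an algorithm behaves at short distances.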

Stereo imagery with extracted corner features. A calibration board can barely be seen in the lower left corners of the images. From Olsen and Theimann (2020).

Proposed Tasks for the master's thesis

The project work is intended to be extended into a master's thesis in the spring of 2021. Several directions are possible, depending on the interests of the student.

- Multi-target and extended object tracking by means of stereo vision.

- Simultaneous localization and mapping (SLAM) by means of stereo vision.

- Stereo vision for infrared cameras.

- Integration of stereo vision in closed-loop collision avoidance experiments.

- Fusion of stereo vision with additional active and passive sensors.

- Investigation of global methods for stereo vision instead of local methods.
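To make the distinction in the last item concrete: a local method chooses the disparity of each pixel independently by comparing small windows, whereas a global method optimizes a cost over the whole image, typically with a smoothness term. The sketch below shows a generic local method, sum-of-absolute-differences (SAD) block matching along a single rectified scanline. It is a textbook illustration in plain Python, not the algorithm used in the earlier MSc work, and the function name and parameters are hypothetical.

```python
def sad_disparity_row(left_row, right_row, max_disp, half_win=2):
    """Local block matching on one rectified scanline.

    For each pixel x in the left row, pick the disparity d in [0, max_disp]
    that minimizes the SAD between the window around left_row[x] and the
    window around right_row[x - d]. Border pixels are left at 0.
    """
    n = len(left_row)
    disp = [0] * n
    for x in range(half_win + max_disp, n - half_win):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost = sum(
                abs(left_row[x + k] - right_row[x - d + k])
                for k in range(-half_win, half_win + 1)
            )
            if cost < best_cost:  # each pixel is decided on its own
                best_d, best_cost = d, cost
        disp[x] = best_d
    return disp


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    right = [rng.random() for _ in range(40)]
    left = [0.0] * 3 + right[:-3]  # synthetic pair with a true disparity of 3
    print(sad_disparity_row(left, right, max_disp=5))
```

Because every pixel is decided in isolation, such a method is fast but tends to fail in textureless regions such as open water or sky, which is one motivation for investigating global methods.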

Contact

For more information, contact supervisor Edmund F. Brekke.

The stereo vision system should work in this environment, i.e., for distances up to 100 m.

References

- Olsen, T. (2020): “Stereo vision using local methods for autonomous ferry”, Specialization project, NTNU.

- Theimann, L. (2020): “Comparison of depth information from stereo camera and LiDAR”, Specialization project, NTNU.

- Olsen, T. and Theimann, L. (2020): “Stereo vision for autonomous ferry”, MSc thesis, NTNU. In writing.